What is MCP - Model Context Protocol? [Lesson 1]

MCP - Explained for Humans.

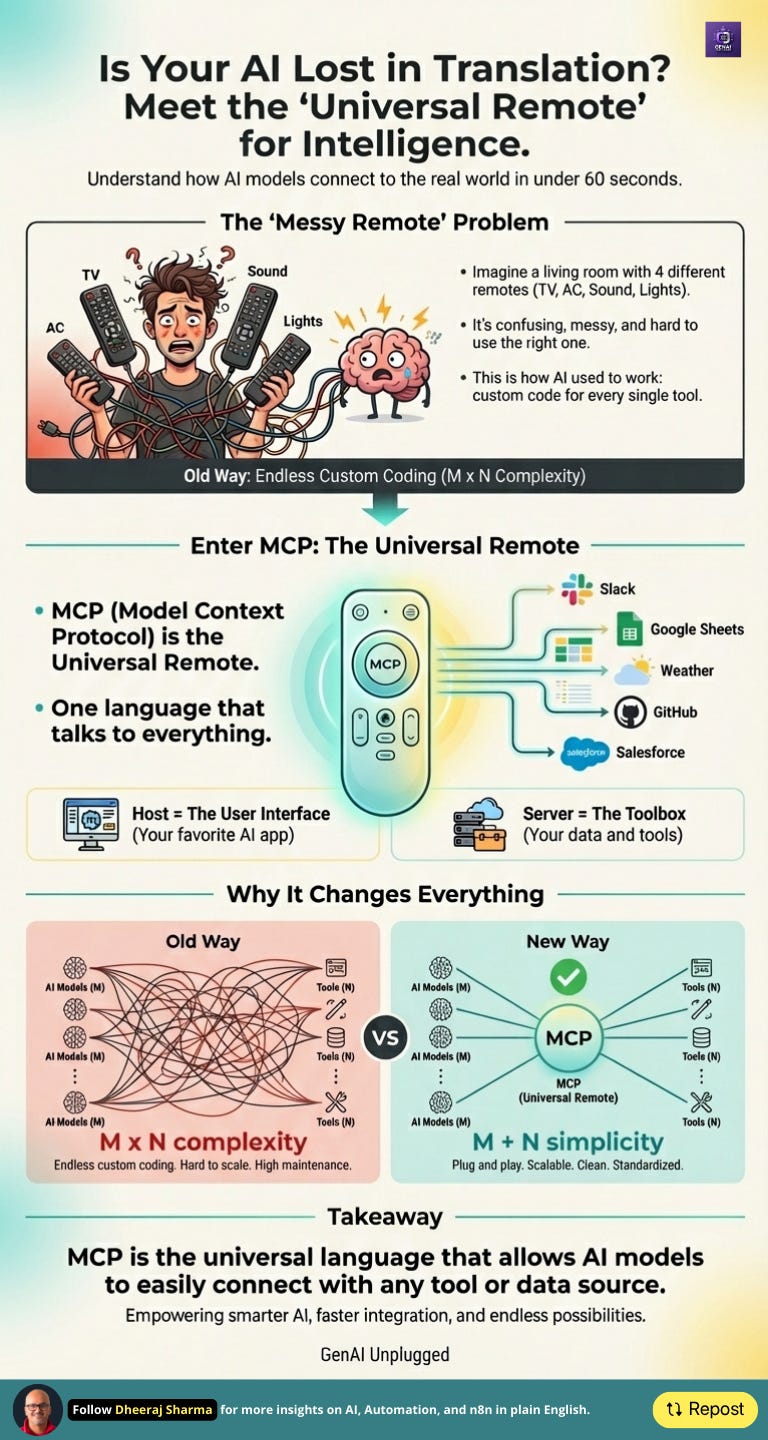

Every few months, a new AI application claims it can ‘connect to everything.’ But how do these AI models actually talk to other apps, databases, or websites?

The answer is MCP, the Model Context Protocol. It’s not a product or an app. It’s a shared language that helps AI models and tools understand each other without confusion.

Let’s make it simple with an example from your living room.

MCP is like a universal remote for AI. One simple set of buttons that lets any smart model use any tool safely and smoothly.

MCP Masterclass - Full Course Index

Lesson 1. What is MCP - Model Context Protocol? ← You are here

Lesson 3. How MCP Actually Works - Hosts, Clients, and Servers

Lesson 4. The Three Superpowers of MCP - Tools, Resources, and Prompts

A Story You Already Know

Picture your living room on movie night.

You have four remotes: one for the TV, one for the sound bar, one for the lights, and one for the AC.

You grab the wrong one, point it the wrong way, and nothing happens.

Frustrating. Right?

Then you buy a universal remote. Now one controller manages everything. You press a button, and it knows which device to talk to.

That’s what MCP does.

AI tools used to be like that messy pile of remotes. Each spoke its own language. MCP gives them one shared language so every AI application can work with any tool.

How MCP Works (Plain English)

When an AI application uses MCP, three main parts work together:

1️⃣ Host - The Stage

The Host is where you interact with the AI model. It could be a chat window, an IDE like Cursor, or even a voice app. It’s the place where everything happens.

2️⃣ Client - The Interpreter

Inside the Host is the Client. Think of it as a translator.

When the AI model says ‘I need to check the weather,’ the Client translates that into MCP language and talks to the Server to get the answer.

3️⃣ Server - The Toolbox

The Server is like a toolbox that holds the real tools. One Server can have many tools inside it - like ‘get weather,’ ‘get forecast,’ and ‘get air quality.’ Each Server waits for any AI application that speaks MCP to ask for its tools.

Now, imagine this flow:

You type: “What’s the temperature in San Francisco?”

The Host receives your question and sends it to the AI model.

The AI model thinks: “I need weather data” and asks for the get_weather tool.

The Client translates this request into MCP language and asks the Server.

The Server runs the tool and returns: {temperature: 22, conditions: “sunny”}.

The Client sends this data back to the AI model.

The AI model creates a friendly answer: “It’s 22°C and sunny in San Francisco.”

The Host shows you the answer.

You don’t see the wiring, but everything works smoothly because the Host, the Client, and the Server all speak the same MCP language.
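If you like seeing things in code, here is a rough sketch of that flow in Python. It is a toy, not a real MCP implementation: everything runs in one process, the weather data is hard-coded, and the function names (`model_pick_tool`, `client_call_tool`, `server_handle`, `host_answer`) are made up for illustration. What it borrows from MCP is the idea that the Client wraps the request in a JSON-RPC 2.0 message with the method `tools/call`. The result shape is simplified here.

```python
import json


def get_weather(city: str) -> dict:
    # Hard-coded sample data so the sketch runs offline.
    return {"temperature": 22, "conditions": "sunny"}


# Server side: a toolbox mapping tool names to plain functions.
TOOLS = {"get_weather": get_weather}


def server_handle(raw_request: str) -> str:
    """Server: find the requested tool, run it, and send back the result."""
    request = json.loads(raw_request)
    params = request["params"]
    result = TOOLS[params["name"]](**params["arguments"])
    return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


def client_call_tool(name: str, arguments: dict) -> dict:
    """Client: translate a tool request into a message the Server understands."""
    request = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "tools/call",
        "params": {"name": name, "arguments": arguments},
    }
    # A real Client would send this over a transport; here we call the Server directly.
    response = json.loads(server_handle(json.dumps(request)))
    return response["result"]


def model_pick_tool(question: str) -> tuple:
    """Stand-in for the AI model deciding it needs weather data."""
    return "get_weather", {"city": "San Francisco"}


def host_answer(question: str) -> str:
    """Host: pass the question to the model, fetch data, show the answer."""
    tool_name, arguments = model_pick_tool(question)
    data = client_call_tool(tool_name, arguments)
    return f"It's {data['temperature']}°C and {data['conditions']} in {arguments['city']}."
```

Calling `host_answer("What's the temperature in San Francisco?")` walks through the same steps as the list above, just without a real model or network in between.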

The Three Building Blocks of MCP

1. Tools - Actions

Actions that perform or change something.

Examples: download a file, run code, send an email, write a post, etc.

2. Resources - Data to Read

Data sources the AI model can look at but not modify.

Examples: company handbook, spreadsheet, documentation snippet, weather data, content swipe file, etc.

3. Prompts - Instructions

Instruction sets or templates that help an AI application start a task.

Example: a “Code Review Mode” prompt that reminds the model what to check.

These three make MCP more than a connector. It’s a structured system with safety rules and reusable parts.
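To make the difference between the three concrete, here is a small Python sketch of a pretend server that keeps them in separate drawers. `ToyServer`, the `handbook://vacation-policy` address, and the sample tool and prompt are all invented for this example. A real MCP server exposes these through the protocol rather than through Python method calls.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict


@dataclass
class ToyServer:
    # Tools: actions the model can ask to run.
    tools: Dict[str, Callable[..., str]] = field(default_factory=dict)
    # Resources: read-only data, looked up by an address.
    resources: Dict[str, str] = field(default_factory=dict)
    # Prompts: reusable instruction templates.
    prompts: Dict[str, str] = field(default_factory=dict)

    def call_tool(self, name: str, **arguments) -> str:
        return self.tools[name](**arguments)

    def read_resource(self, uri: str) -> str:
        return self.resources[uri]

    def get_prompt(self, name: str, **arguments) -> str:
        return self.prompts[name].format(**arguments)


server = ToyServer()
server.tools["send_email"] = lambda to, body: f"sent to {to}"
server.resources["handbook://vacation-policy"] = "Employees get 20 days off per year."
server.prompts["code_review"] = (
    "Review this {language} code for bugs, unclear names, and missing tests."
)
```

Notice that only the tool does something. The resource is just read, and the prompt is just a filled-in template.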

Why MCP Matters

Let’s look at what came before it.

If you had 3 AI applications and 3 tools, each application had to build custom code for each tool separately. That’s 9 custom integrations to build and maintain. Add one more tool, and it jumps to 12. Each integration had its own bugs and headaches.

With MCP, it’s simpler. Each AI application learns to speak MCP once. Each tool learns to speak MCP once. Now it’s 3 + 3 = 6 implementations. Clean and scalable.
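The arithmetic is easy to check for yourself. This tiny Python sketch (the function names are just for illustration) compares the two approaches for any number of applications and tools:

```python
def custom_integrations(apps: int, tools: int) -> int:
    """Without a shared standard: every app needs its own code for every tool."""
    return apps * tools


def mcp_implementations(apps: int, tools: int) -> int:
    """With a shared standard: each app and each tool implements it once."""
    return apps + tools


for apps, tools in [(3, 3), (3, 4), (10, 20)]:
    print(apps, "apps,", tools, "tools:",
          custom_integrations(apps, tools), "custom vs",
          mcp_implementations(apps, tools), "with MCP")
```

The gap widens quickly: with 10 applications and 20 tools, it is 200 custom integrations versus 30 implementations.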

Add a new tool? Plug it in.

Add a new AI? It already speaks the language.

That’s why companies like Anthropic (Claude Desktop), Cursor, and OpenAI are using MCP. It standardizes communication between AI applications, AI models, and the tools.

Common Questions

Q: Can the AI model run dangerous commands through MCP?

A: Good MCP applications will always ask your permission first before running anything risky. You stay in control of what gets executed.

Q: Where do these tools live?

A: On MCP Servers. They can be on your machine or hosted online.

Q: Is MCP just for developers?

A: No. It’s for anyone who wants AI to connect with other services without rebuilding everything from scratch.
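For the curious, the “ask your permission first” idea from the first answer could look something like this in Python. This is only a sketch of the pattern, not how any particular application does it. The `RISKY_TOOLS` list and the `call_with_consent` function are hypothetical, and each real application decides for itself what counts as risky and how to ask.

```python
from typing import Callable

# Hypothetical list of tools the application treats as risky.
RISKY_TOOLS = {"run_code", "send_email"}


def call_with_consent(
    name: str,
    run_tool: Callable[[], str],
    ask_user: Callable[[str], bool],
) -> str:
    """Run a tool, but ask the user first if the tool is on the risky list."""
    if name in RISKY_TOOLS and not ask_user(f"Allow the AI to run '{name}'?"):
        return "denied by user"
    return run_tool()


# Example: the user says no to sending an email, so nothing is sent.
outcome = call_with_consent(
    "send_email",
    run_tool=lambda: "email sent",
    ask_user=lambda question: False,
)
```

In a real application, `ask_user` would be a dialog box or a confirmation prompt rather than a function that always answers no.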

Your Turn - Mini Exercise

Grab a pen. Write down three tasks you do manually every day, such as checking email, updating a spreadsheet, or posting to Slack.

Now imagine a small “tool” doing each one. If your AI assistant could trigger those safely through MCP, how much time would that save?

That’s the kind of automation MCP enables.

Key Takeaways

MCP is a universal remote that lets AI applications safely connect to external tools and data.

It connects applications (Hosts) to tool servers through a standard language.

It reduces integration work from M × N to M + N.

It defines how AI applications use tools, read data, and follow prompts.

It keeps you in control through permissions and safety checks.

Closing Thought

Every major tech shift starts with a shared standard, like USB for hardware or HTTP for the web. For AI applications, that standard is shaping up to be MCP, at least for now (it may be something else in the future).

In the next lesson, we’ll explore why MCP had to exist in the first place and how the old “connect everything manually” approach created endless chaos for developers and AI application builders.

PS: If you learned something new, reply and tell me one task you wish your AI assistant could handle. Your idea might feature in the next demo.
